Machines of Loathsome Gaucherie

The generative AI hype cycles never seem to end. But who or what is any of this slop for, anyway? And what does it say about the taste and judgement of those who make it possible?

Anthropic's CEO Dario Amodei puts an optimistic spin on AI in his popular blog post "Machines of Loving Grace." Should we buy the hype? In this first of a series of posts on the social, cultural, and political impacts of AI, I offer some reflections on how text and image generators are shaping digital culture, in part by acculturating us to a convenient, distracting, undemanding, and often cruel aesthetic of glossy mediocrity.

Like many people, I’ve been thinking about generative AI a lot. Maybe too much. I would like, in all earnestness, to think about anything else.

But I’m a higher education professional who teaches and researches, amongst other things, media. And as any exasperated teacher wading through mediocre machine-generated coursework will tell you, this stuff just won’t seem to go away. Indeed, its output, commonly described as slop, is proliferating online at an alarming rate.

It’s no longer possible to open a social media app without being immediately inundated with a flood of AI-generated images and videos. Clear (and eminently brainless) genres begin to emerge: “AI draws different nations as XYZ,” deep-faked celebrity porn, and horrifying fantasy creatures being only some of the most commonplace.

Now, it’s important at the outset to note that content generators are by no means the be-all and end-all of AI technology. Indeed, it is difficult to talk about “AI” in a way which is meaningful to the majority of normal people, as the term actually encompasses many branches of knowledge, areas of research, disciplines, fields, and so on. All of these in turn deal in a veritable host of practices, strategies, and technologies with names which, to most people, sound like unintelligible jargon: symbol manipulation, machine learning, neural networks, pattern recognition, diffusion, generative pre-trained transformers (the GPT in ChatGPT), and so on and so forth. If all of that sounds like gobbledygook to you, that’s fine; it’s not really important for most people in their daily lives to have much more than a general idea of what these things are.

What is far more important, and far less studied, is what the penetration of these technologies and their outputs into our daily lives is doing to us, our cultures, our societies, and our politics.

In today’s post, I will focus explicitly on AI text and image generators and the impact they are having on our cultures and, by extension, the acculturation of our tastes. But first, I want to make a quick detour through the words of a man who has positioned himself as a leading voice in AI ethics and safety: Dario Amodei.

From Doomer to Booster: “Machines of Loving Grace”

Recently, I finally found the time to sit down and peruse Dario Amodei’s blog post “Machines of Loving Grace.” Amodei is the co-founder and CEO of Anthropic, a rival to OpenAI which supposedly sets issues of AI safety and ethics at the core of its products. Its chatbot, Claude, is often celebrated for being more elaborate in its responses than its competitors, and is thus favored by many in the intelligentsia. Amodei is (in)famous for communicating a “P-doom” (an estimation of how likely it is that AI leads to destructive outcomes) of 10-25%. That’s high; if you were inventing something which had a 10% chance of wiping out humanity, would you continue to work on it?

Given that, readers may be surprised to find that “Machines of Loving Grace” is not a doomer’s manifesto; rather, it reads like something between marketing copy and a Wikipedia article (making me wonder how much of the actual writing Amodei farmed out to his own chatbot). Its sections are heavily given over to bullet points, most of which offer speculative predictions as to how AI will, in Amodei’s estimation, help to solve problems related to health, economics, politics, governance, and work.

Read generously, the blog post is a basic primer enumerating a wish-list of things most AI engineers would like to see their work eventually help to accomplish. (I refuse to call it an essay—it’s not nearly ambitious enough in either style or content for that august designation.) Read skeptically, it’s boosterism; an attempt to gin up financial and public support for technologies which will likely only be used to push even more commercial products, advertisements, and time-wasting diversions into our faces. If you haven’t guessed already from the title of this post, I suspect that the former may be possible, but that the latter is the more probable outcome.

It’s not that I think that Amodei and others are insincere; it’s that I don’t think they understand the issues and needs they want to tackle deeply enough to address the problems they enumerate. Moreover, I suspect that they are blinded to some degree by their own interests (aren’t we all?). Finally, I believe that they are too beholden to the dictates of capitalism (and worse: VC funding) to see and act without bias. This financial dependence forces them to gin up constant media attention so as to attract capital and users; hence the constant arms race of perpetually releasing marginally better “models” of the two most commercially popular applications of these technologies: chatbots and image generators.

Now, I think there are a lot of potentially useful scientific applications for generative AI. It could speed up biomedical research and help us discover new treatments for diseases. It can serve as a useful interface for brainstorming or bouncing ideas around. There is even some evidence that it can be usefully deployed as a tutor or teaching assistant.

Moreover, Amodei’s company, Anthropic, is far from the worst offender on the generative AI market. For example, Claude doesn’t (yet) have an in-built image generator, although I am willing to bet that this has less to do with ethics or safety and more to do with the prohibitive cost of providing this high-computation service.

That said, Anthropic is both a spin-off and direct competitor of OpenAI; once upon a time, OpenAI CEO Sam Altman also used to put a premium on “ethics and safety,” at least rhetorically. Now Altman is running full-speed towards the profit motive. One way to read “Machines of Loving Grace” is as Amodei gravitating towards Altman’s position. It isn’t really important what one individual player in this industry is or isn’t up to at any given moment. What’s important is the network of incentives they are embedded in, how they understand themselves and their mission as a class, and what their products actually do when introduced into the world.

Indeed, there are real problems which seem built into the very nature of these products. These make their usage more problematic and risky than most people are aware.

The Trouble with Chatbots and Image Generators

It is by now fairly well known that the writing GPT chatbots produce is not only frequently beset by inaccuracies and blatant, sometimes embarrassing falsehoods (“hallucinations” in AI jargon), but also mostly uninspiring.

This is of course not surprising, given that they are trained mostly on material from the open internet; their default style is thus the lowest common denominator of search-engine-optimized content-farm and marketing copy. (This isn’t to bash the writers who produced that copy, by the way; copywriters are constrained by the preferences built into search engine algorithms as well as the poor literacy levels of broad swaths of the general public.)

Sure, if you collect a specialized corpus and re-train a chatbot on data lifted from, say, books of poetry and then prompt it very well, it may produce interesting, and sometimes haunting, verse. (Interestingly, the title of Amodei’s blog post is itself lifted from a sci-fi themed poem—which, to his credit, he cites in a footnote, and to which we will return in the final section of this post). The problem, however, is that much of that verse tends to be lifted sometimes line-by-line from actually existing canonical works, resulting in curious mash-ups at best or full reproductions at worst. Indeed, there is a known problem of AI text generators plagiarizing all kinds of works; in one case the AI platform Perplexity plagiarized articles from Wired magazine, which—get this—criticized Perplexity for plagiarism. You can’t make this stuff up.

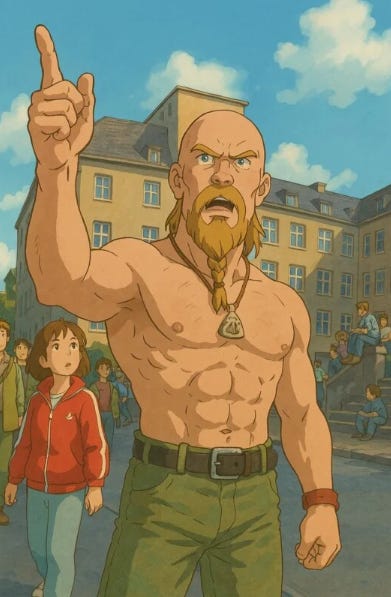

Similarly, the other most popular kinds of generative AI applications are image and video generators. These are getting quite good, capable of producing increasingly high-quality images ranging in scope from the photorealistic to the highly fantastic. Most recently, you’ve probably noticed the avalanche of memes and images uploaded to OpenAI’s new model and converted into anime frames in the style of the beloved anime production company, Studio Ghibli.

The famed cofounder and head of Studio Ghibli, Hayao Miyazaki, has expressed deep annoyance not only at this current crop of images, but also at the entire project into which text and image generators enlist us. He has said, “Whoever creates this stuff has no idea what pain is whatsoever. I am utterly disgusted… I strongly feel that this is an insult to life itself.” OpenAI has since said that they have cracked down on Ghibli-styled work, yet I have no doubt that people will soon find ways to get around their guardrails.

Fans have similarly expressed disappointment and outrage:

I confess to having mixed feelings on this issue; there is no doubt that text and image generators have useful applications, but usefulness is not the same as fairness. On the issue of fairness, however, those of us who have unknowingly contributed to these training sets (which is virtually anyone who’s ever posted anything to the internet) do not have the institutional support or insider access to claim credit, let alone receive any form of recognition or recompense for our contributions, however meager in comparison to those of a beloved and prolific artist like Miyazaki. If these technologies are trained on our common cultural heritage, isn’t there some justification for their common usage, provided that they are used with responsibility and sensitivity?

All of this in turn raises a question:

Just Who Owns the Means of Meme Production?

Rendering your family portraits or vacation photos in Ghibli’s signature style may sound charming, but keep something in mind: OpenAI has no intellectual property rights to any images produced by Studio Ghibli. This means that they almost certainly copied those images and fed them into their training algorithms, without the permission of the copyright holders, in order to achieve this capability. That, too, is not surprising; almost all of the material used to train these systems was scraped from the open web and other digitized sources without the permission of creators. If you’ve ever posted on DeviantArt, uploaded family photos to the open web, or even just written a Google Maps review, this means you, too. Indeed, many publication outlets, most notably The New York Times, have initiated lawsuits against OpenAI and others for copyright infringement for using this content without permission.

I’m no legal scholar, so I’ll leave it to the lawyers and the courts to suss out what this means in terms of intellectual property law. Suffice it to say that even if it can be, something advertising itself as a “Machine of Loving Grace” should not be built on stolen data. That said, AI companies have since made deals with publishers and web applications for use of their data; notably, however, these deals don’t generally include remunerating users for their data. This is because applications generally already force users to agree to the commercial use of their data when they accept a platform’s terms and conditions.

It’s their digital world, you’re just data in it.

My larger concern here, however, is not with the legality of these applications’ training or output, but rather with the sheer volume at which that output is currently circulating in highly frequented digital spaces, and what this may mean for the expectations we begin to fashion for online culture.

Crowding Out & Mucking Up

I might sound old and stodgy (I’m really putting the “elder” in Elder Millennial here), but I don’t think that any serious person can at this point deny the fact that digital technologies have failed to deliver for us as a culture.

People read fewer books than ever before, and literacy levels are plummeting; most Americans cannot read above an eighth-grade level, let alone parse a sentence from William Faulkner’s Absalom, Absalom! “Prestige” television series are increasingly produced with the express purpose of being easy to ignore, as it is presumed that most viewers will be distracted by second screens. Cinema, long losing out on ticket sales in an era of on-demand streaming, finds itself increasingly beset by trouble. Hollywood seems to be struggling generally, and everyone from actors to screenwriters to cameramen fears being replaced by algorithmically generated video. Even social media influencers and podcasters fear being replaced by AI-generated personalities.

Entertainment and advertising have long been eating into the time we used to spend appreciating art and reading literature. Long have stodgy critics bemoaned the success of the popular at the expense of the profound. Now even fluff reality TV series are getting crowded out by a proliferation of visually striking yet ultimately shallow AI-generated “content.” The only other up-and-coming pastimes which seem capable of challenging it for our increasingly scarce and distracted attention are pay-to-play video games, online gambling, and sports betting.

Most recently, a series of reports has noted that image generators in particular have become favorites of influencers on the far right, who are using them to flood the zone with not just bullshit, but AI sludge. This led The Atlantic to announce that “The MAGA aesthetic is AI slop.” Such imagery has been used to further disinformation campaigns, but also to advertise real-world cruelty, as when the Trump White House posted a meme based on photos of an arrest of a weeping Dominican immigrant in the aforementioned Ghibli filter:

I cannot speak to the credibility of the allegations against this detainee, although I will note that there is good reason to be suspicious of anything this White House says or does given their recent flouting of legal procedure. They’ve been illegally targeting legal residents with no criminal histories, and have made no secret of their intention to deport even legal Green Card holders whose speech they just don’t like. They’ve even begun revoking the visas of hundreds of international students.

In some sense, though, whether this person has broken the law or not is beside the point: the use of the Miyazaki filter is in itself abjectly tasteless, and there is no reason to advertise such a thing other than to normalize cruelty and stoke the flames of xenophobia amongst the MAGA base. But then again, I’m also more than willing to believe that those who lack good taste are probably also more prone to engaging in unnecessary cruelty “just for the lol’s.”

Nowadays, great art gets increasingly crowded out by high levels of either mind-numbingly pleasant or gratuitously offensive AI-generated sludge. Some of it may be charming, but none of it is really challenging. Over time we become acculturated to it, which deteriorates any sense of sound aesthetic judgement or good taste. This, I’d wager, also degrades our political sensibilities and a sense of social solidarity, something I hope to come back to in future posts.

I do not want to deny the fact that AI technologies can find their place in great works of art; technologies are tools and mediums which can be incorporated into very interesting pieces. I previously wrote about the deep audience reactions provoked by the AI-enabled robotic artwork Can’t Help Myself; electronic music has long relied on technologies similar to those in AI training stacks, and newer waves of artists even train models on their own data sets for use in music production. Plenty of other artists have incorporated or thematized machine learning algorithms in their larger exhibitions. AI technologies can indeed be mediums through which to ideate and realize great works of art.

However, commercial chatbots and image generators as they currently exist do little to promote this kind of artistic engagement. They instead function in a mostly parasitic relation to prior works of art. They ask very little of those who fancy themselves “artists” after merely entering some prompts in a box. Prompt engineering may take some skill, but it’s nothing compared to the skill of those who have spent years honing their crafts.

Flooding the zone with AI-generated slop-art only further mucks up our already toxic information ecosystem on the one hand and crowds our feeds with useless timewasters on the other. And if you think it’s bad now, just wait until social media platforms find ways to firehose an “infinity content” of AI-generated personalized brand advertisements targeted to each individual one of us based upon our personal data.

Of course, I don’t expect any of this to register with the wider community of tech bro entrepreneurs who, for the most part, don’t really care about, understand, or appreciate art in the first place. Indeed, one may only look at their own engagements with art to understand precisely why they seem so completely perplexed on the matter. It all boils down to one simple fact:

Most Tech Bros are Poor Readers

To close, I want to turn to the poem after which Amodei christens his post, as I think it is instructive as to precisely how these guys think about and relate to art.

I want to argue that Amodei’s uncritical adoption of only the most obvious utopian surface-elements of this poem reveals him as a poor reader. In particular, Amodei fails to consider that not only may the intentions of the poet and the speaker be incongruent, but that the poem itself may even in some senses militate against both; he fails to admit, in other words, that poems may live lives of their own.

Perhaps because of this, Amodei fails to detect the naivete of the poem’s speaker, whom the poem itself parodies formally by introducing parenthetical asides in the second line of each strophe: “(and the sooner the better!)” / “(right now, please!)” / “(it has to be!).” The speaker is not only naively impatient, but dreams of a world in which all human responsibility—towards nature, towards humanity, even towards computers—is outsourced to silicon-based shepherds. Does this return us to nature, and if so what does it mean for humans to inhabit an ontological and ethical plane of equivalence with cattle and sheep?

Amodei seems not to have considered the implications of the speaker’s interjections, which evoke both a religious diction and a prayerful cadence, culminating in the replacement of a watchful God with “machines of loving grace.” What does it mean for man to “build God” from machines and install them in God’s place? Is this just a way of disavowing our own responsibility as God’s appointed stewards of nature? One thing’s for sure—Brautigan believed it was impossible to find happiness in a polluted environment, and would therefore be deeply unhappy with the astronomical levels of energy usage and pollution created to generate AI text and images, which is in turn used to pollute the information environment he expressed such high hopes for.

All of this makes the poem itself ambivalent, and perhaps even totally suspicious, of the utopian dream fashioned by its own speaker’s (and indeed perhaps even its author’s) naïve lack of patience. Is this a utopia in which computers have freed humanity from toil and Man has therefore overcome his alienation from nature? Or, as is the case with nearly all sci-fi literature, is this a dystopia-in-disguise, in which Man has been relegated to the status of mere cattle?

It seems, in fact, that Amodei is such an unsophisticated exegete that he has failed to consider that the intentions of the author, the prayers of the speaker, and the semantic engine of the poem itself may not in fact all align. Brautigan was a serious poet, and no doubt presumed a sensitive, skeptical, and deep-delving reader capable of dwelling in the tensions and contradictions of the text, exerting their own imaginative agency through the poem, and thus allowing the poem to live a life independent even from the supposed intentions of its creator (which, by the way, we cannot ever really presume to know). It’s true that Brautigan had sometimes expressed (in my view, similarly naïve) techno-utopian sentiments related to information, but many of his poems also express anxieties about the potential for technology-driven human hubris. Do we really think that he wouldn’t want us to think for ourselves, especially about the multiplicity of things his own work could mean?

A good reader is capable of pushing back against their own first impressions of a work of art, thereby infusing their own self-reflexive consciousness into a poem and taking responsibility for their own reading. Such responsibility requires patiently and probingly reading against the grain of the poem’s own presumed innocence, as well as our own default assumptions about it, ourselves, and the world. This is a point I’ve made in a previous post on why chatbots are themselves such poor literary interpreters.

Amodei, however, is an unsophisticated, perhaps even opportunistic reader. For Amodei, the allusion to Brautigan’s poem is just a cute accoutrement, a clever way of showing off that he’s well-read and clever. He cites the poem not to thoughtfully plumb its depths for insight, but rather for the cultural capital it lends him. Its value to him is, in other words, strictly utilitarian.

Indeed, he is not alone in this: often, tech entrepreneurs reach for genres like sci-fi not to engage in them as literature, but because they see sci-fi as a treasure trove of potential product ideas. They seem either incapable of grasping or unconcerned with the fact that most of these works are not blueprints, but cautionary tales.

Like the poem’s naïve speaker, Amodei fails to attend deeply and read between the lines. More importantly, he refuses to take seriously the responsibility which all good poems invest in their readers. He lacks, in sum, a critical consciousness towards art. Perhaps this is why he and his ilk have so far not succeeded in building machines of loving grace, but rather firehoses of loathsome gaucherie.

As with the speaker of Brautigan’s poem, Amodei falls prey to what literary critics call “structural irony”: their naivete, ignorance, and self-interested reasoning make them unreliable narrators, a fact of which they themselves may not even be aware. In this case, both are overly eager to rush forward into a future the implications of which they cannot possibly imagine. In both cases, we do not yet know how the stories will play out, but we have a good idea that their hopes are too naively unrealistic to take too seriously. This is not because they are insincere, but because they are too impatient for success and too enamored with their own dreamy visions to practice self-reflection and caution.

In this sense, Amodei is not so different from other tech entrepreneurs; in their mad rush to realize speculative futures, they are all too willing to move fast and break things. And what better set-up for a dramatic fall from grace than a well-meaning, powerful man undermined by the hubris of his own impatience?

Let’s just hope they don’t take the rest of us, and our cultures, down with them.

In closing, let me offer in response to Amodei another poem by Richard Brautigan, to which I think we can all relate: